Introduction

The rapidly evolving AI era presents new discoveries daily, particularly in software engineering, where AI assistants are now integral to our workflows. While these innovations have boosted productivity, they also bring challenges like:

- Reliance on cloud-based services and closed models 🛅

- Privacy and security concerns 🔒

- Other barriers to entry 🚧

The Challenge of Cloud-Based and Proprietary Models

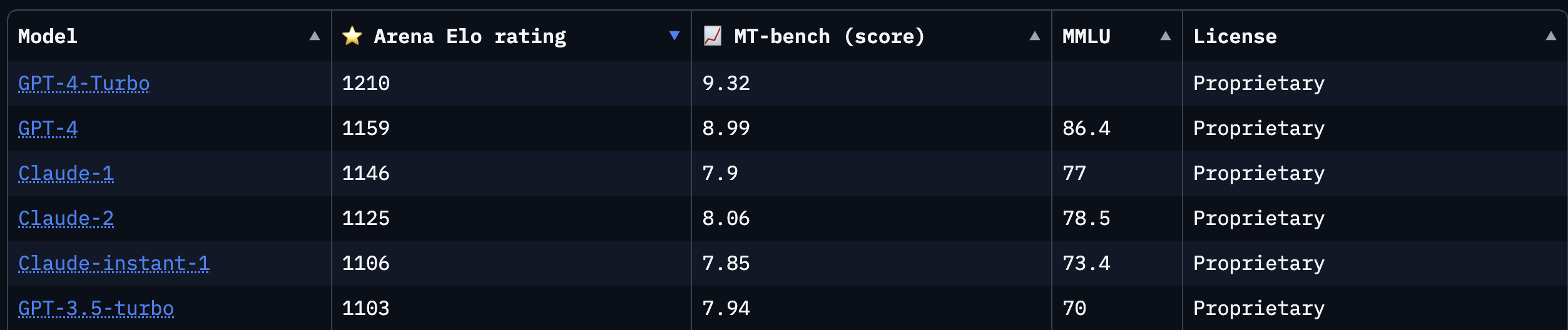

According to the Chatbot Arena leaderboard, the top entries, those with the highest Elo ratings, are all proprietary models.

This is not surprising, since the best models are trained on massive datasets and require significant compute resources. However, it means the best models are hard to experiment and iterate with: you either pay for access, rely on an online workflow, or both.

Privacy and Security Concerns

Using cloud-based models or services often involves sending data to third parties, raising significant privacy and security concerns.

Overcoming Barriers to Entry

The cost of model access and the need for GPUs present significant barriers, especially for beginners or those wanting to experiment without substantial investment.

The Advantages of Local Large Language Models (LLMs)

Local LLMs emerge as an ideal solution, offering privacy, security, and lower barriers to entry. I explored several options, like:

All of the above are great projects and can run the top open-source models locally. I experimented on MacBook Pros both with and without M1 chips, and in both cases the performance was great, as was the ease of setup. I found Ollama to be the easiest to set up and use. It can also multiplex between multiple models, and it has a very Docker-like feel to it. Ollama also exposes a REST API, which makes it easy to integrate with other tools.
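
To make the REST API point concrete, here is a minimal sketch of sending one prompt to a local Ollama server. It assumes Ollama is running on its default port (11434) and that the model has already been pulled. The model name `llama2` and the helper names (`buildGenerateRequest`, `generate`, `FetchLike`) are illustrative and do not come from DistiLlama.

```typescript
// Minimal sketch of calling a local Ollama server over its REST API.
// Assumptions: default port 11434, model already pulled locally.

interface GenerateRequest {
  model: string;
  prompt: string;
  stream: boolean;
}

// Narrow description of the parts of fetch() this sketch relies on,
// so the function can be exercised with a stub instead of a live server.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string }
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

const OLLAMA_GENERATE_URL = "http://localhost:11434/api/generate";

// Build the JSON body for a single, non-streaming completion.
function buildGenerateRequest(model: string, prompt: string): GenerateRequest {
  return { model, prompt, stream: false };
}

// Send the prompt and return the generated text from the "response" field.
async function generate(
  model: string,
  prompt: string,
  fetchFn: FetchLike
): Promise<string> {
  const res = await fetchFn(OLLAMA_GENERATE_URL, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(buildGenerateRequest(model, prompt)),
  });
  if (!res.ok) {
    throw new Error(`Ollama request failed with status ${res.status}`);
  }
  const data = (await res.json()) as { response: string };
  return data.response;
}
```

In a browser or a recent Node.js runtime, the global `fetch` can be passed in directly, for example `generate("llama2", "Why is the sky blue?", fetch).then(console.log)`. Passing the fetch function as a parameter keeps the sketch easy to try without a running server.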

Building a Browser Sidekick

With the barrier to entry removed, I started thinking about how to use these models for day-to-day tasks. After a while, I landed on the idea of building a browser sidekick. Since the browser has access to both private and public content, it is a great place to have a local LLM. The idea is to make a local LLM available in the browser via a browser extension that can assist with tasks such as:

- Summarization of web pages

- Answering questions about the web page

- Answering questions about local documents

- Chatting with the LLM from the browser
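
As a rough illustration of the summarization task, the sketch below splits page text into chunks that fit a model's context and wraps each chunk in a prompt. The helper names, the prompt wording, and the character-based size limit are all assumptions made for illustration. This is not DistiLlama's actual implementation.

```typescript
// Hypothetical helpers for preparing page text for summarization.

// Split text into chunks of at most maxChars characters, breaking on
// whitespace. A single word longer than maxChars becomes its own chunk.
function chunkText(text: string, maxChars: number): string[] {
  const words = text.split(/\s+/).filter((w) => w.length > 0);
  const chunks: string[] = [];
  let current = "";
  for (const word of words) {
    const candidate = current.length === 0 ? word : current + " " + word;
    if (candidate.length > maxChars && current.length > 0) {
      // Adding this word would overflow, so close the current chunk.
      chunks.push(current);
      current = word;
    } else {
      current = candidate;
    }
  }
  if (current.length > 0) {
    chunks.push(current);
  }
  return chunks;
}

// Wrap one chunk in a simple summarization instruction.
function buildSummaryPrompt(chunk: string): string {
  return `Summarize the following web page excerpt in a few sentences:\n\n${chunk}`;
}
```

Each prompt could then be sent to the local model, and the partial summaries combined in a final pass.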

One of the inspirations for this was Brave Leo AI.

DistiLlama

After a lot of prototyping and experimentation, I had a working extension. The hard part was naming it. After a bit of wordplay, and as an homage to the Llama family of LLMs, I landed on the name DistiLlama.

DistiLlama is built primarily with Ollama, LangChain, Transformers.js, and Voy.
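
A stack like this is commonly used for retrieval: text chunks are turned into embedding vectors, and the chunks closest to a question's vector are passed to the model as context. The sketch below shows only that ranking step with plain arrays. It assumes the embeddings already exist, it does not call Transformers.js or Voy, and the names `EmbeddedChunk`, `cosineSimilarity`, and `topK` are hypothetical.

```typescript
// Illustrative ranking step for question answering over page chunks.
// Assumption: vectors were produced elsewhere by an embedding model.

interface EmbeddedChunk {
  text: string;
  vector: number[];
}

// Cosine similarity between two vectors of equal length.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) {
    throw new Error("Vectors must have the same length");
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) {
    return 0; // A zero vector has no direction, so treat it as unrelated.
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Return the k chunks most similar to the query vector, best first.
function topK(
  query: number[],
  chunks: EmbeddedChunk[],
  k: number
): EmbeddedChunk[] {
  return chunks
    .map((chunk) => ({ chunk, score: cosineSimilarity(query, chunk.vector) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map((entry) => entry.chunk);
}
```

A dedicated vector index does the same job more efficiently on large collections. The brute-force version is only meant to show the idea.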

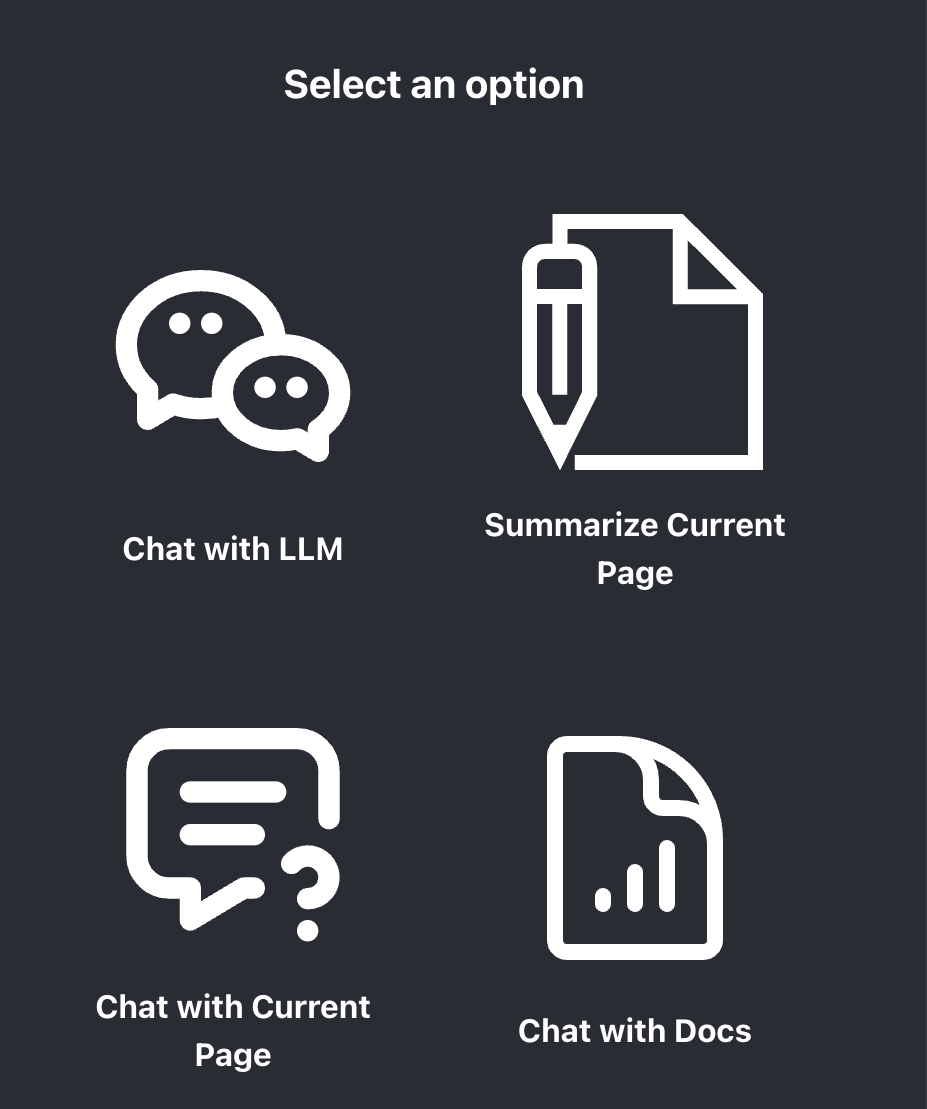

Here is the main menu of the DistiLlama extension side panel:

Here is a demo of DistiLlama in action:

Conclusion

Check out DistiLlama on GitHub and let me know what you think. It has certainly helped me with my daily productivity and I hope it helps you too.

More experiments and more tools are coming soon. Stay tuned 😄