Imagine if, just like the Avengers, a team of specialized AI agents could come together, each with its unique strengths, to tackle complex problems. This isn’t a scene from a sci-fi movie; it’s the reality of modern artificial intelligence. In the world of AI, assistants and agents work in unison, much like a well-coordinated superhero team. Each AI agent, equipped with specific skills — be it data analysis, autonomous decision-making, or predictive analytics — joins forces to form a formidable swarm, ready to take on tasks ranging from mundane to monumental. In this post, we’ll delve into how these AI ‘heroes’ are assembled and how they’re revolutionizing our approach to problem-solving, just as the Avengers revolutionized superhero teamwork.

Artwork Generated by DALL·E 3


AI Assistants and Agents

In the dynamic world of AI, the terms ‘assistants’ and ‘agents’ are often used interchangeably, yet they represent distinct modalities in artificial intelligence. AI assistants, like the one you’re interacting with now, excel in roles akin to a co-pilot, offering guidance and support based on user inputs. They are adept at understanding and responding to specific queries, making them invaluable for tasks that require direct interaction and immediate feedback.

Conversely, AI agents operate with a degree of autonomy that sets them apart. These agents are programmed to make decisions and take actions without human intervention, often in complex environments. This autonomy enables them to manage tasks, learn from interactions, and even predict needs, making them ideal for scenarios where continuous oversight isn’t feasible.

Understanding these nuances is key to appreciating AI’s diverse capabilities and potential applications in our ever-evolving digital landscape.

In this post, we will look at use cases for AI assistants and agents and build an AI agent swarm that helps with a task many individuals perform routinely.

We will utilize tools, knowledge and local Large Language Models (LLMs) to build this swarm.

Use cases for AI assistants and agents

AI assistants

- Personal Productivity: Assisting with scheduling, email management, and calendar organization.

- Customer Support: Handling queries, providing information, and guiding users through processes.

- Information Retrieval: Searching for and summarizing information, and providing recommendations based on user preferences.

- Educational Support: Offering tutoring, language learning, and personalized learning assistance.

- Healthcare Assistance: Reminders for medication, scheduling appointments, and providing health-related information.

AI agents

- Autonomous Decision-Making: Making independent decisions in complex environments, such as in autonomous vehicles or smart home systems.

- Predictive Analytics: Analyzing data to predict trends, customer behavior, and maintenance needs in industries.

- Customer Interaction: Managing customer interactions more autonomously, often in retail or hospitality.

- Security and Surveillance: Monitoring environments and detecting anomalies without human intervention.

- Smart Manufacturing: Automating and optimizing manufacturing processes, and managing supply chains.

Building an AI agent swarm to assist in routine activities

This is a scenario inspired by the real world, in which we will build an AI agent swarm to assist with routine activities. It is a simplified version of a recurring task that many individuals face:

Compiling a list of the tasks an individual worked on in a given time period and highlighting the work that was done. Such a report is often useful to present to managers, team members and other stakeholders.

In this case we will use the following personas for the agents:

- Jira agent

- Communication agent

- File writer agent

The goal in this case is to build an AI agent swarm that can produce a report of the work done by an individual in a given time period and format it in a way that is easy to consume.
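To make the division of labor between the three personas concrete, here is a minimal sketch in plain Python. It is not the swarm itself: no LLM or Jira connection is involved, and the `Ticket` class, the three functions and the sample data are all hypothetical stand-ins. It only shows the flow of data that the agents are expected to handle: select the issues for a period, turn them into a readable report, and write that report to a file.

```python
import os
import tempfile
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class Ticket:
    """Hypothetical stand-in for an issue returned by a Jira query."""
    key: str
    summary: str
    status: str
    updated: date


def jira_agent(tickets: List[Ticket], start: date, end: date) -> List[Ticket]:
    """Stand-in for the Jira agent: keep tickets updated within the period."""
    return [t for t in tickets if start <= t.updated <= end]


def communication_agent(tickets: List[Ticket], start: date, end: date) -> str:
    """Stand-in for the communication agent: format tickets as Markdown."""
    lines = [f"# Work report: {start.isoformat()} to {end.isoformat()}", ""]
    for t in tickets:
        lines.append(f"- **{t.key}** ({t.status}): {t.summary}")
    return "\n".join(lines) + "\n"


def file_writer_agent(report: str, path: str) -> str:
    """Stand-in for the file writer agent: save the report, return its path."""
    with open(path, "w", encoding="utf-8") as fh:
        fh.write(report)
    return path


def run_swarm(tickets: List[Ticket], start: date, end: date, path: str) -> str:
    """Run the three stand-in agents one after another."""
    selected = jira_agent(tickets, start, end)
    report = communication_agent(selected, start, end)
    return file_writer_agent(report, path)


if __name__ == "__main__":
    # Invented sample data, for illustration only.
    sample = [
        Ticket("PROJ-1", "Fix login bug", "Done", date(2024, 1, 10)),
        Ticket("PROJ-2", "Update onboarding docs", "In Progress", date(2024, 1, 22)),
        Ticket("PROJ-3", "Older task outside the period", "Done", date(2023, 12, 1)),
    ]
    with tempfile.TemporaryDirectory() as tmp:
        out = run_swarm(sample, date(2024, 1, 1), date(2024, 1, 31),
                        os.path.join(tmp, "report.md"))
        with open(out, encoding="utf-8") as fh:
            print(fh.read())
```

In the actual swarm each of these steps is handled by an LLM-backed agent rather than a fixed function, so the query and the formatting are worked out by the model instead of being hard-coded.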

Frameworks

I looked at candidate frameworks that support multi-agent execution for building the agents and found the following:

AutoGen is a framework that facilitates the development of next-generation Large Language Model (LLM) applications by enabling multiple agents to converse with each other and solve tasks. It supports diverse conversation patterns and provides a collection of working systems from various domains. AutoGen agents are customizable, conversable, and allow human participation in their operations.

It simplifies the orchestration, automation, and optimization of complex LLM workflows, maximizing the performance of LLMs. It also provides enhanced LLM inference with utilities like API unification and caching.

CrewAI is a framework designed for orchestrating role-playing, autonomous AI agents. It enables AI agents to assume roles, share goals, and operate as a cohesive unit. It is suitable for a variety of applications, including smart assistant platforms, automated customer service ensembles, and multi-agent research teams. It offers role-based agent design, autonomous inter-agent delegation, and flexible task management. Giving each agent a specific role and goal is intended to make problem-solving more efficient.

Langroid, developed by researchers from CMU and UW-Madison, is an intuitive, lightweight, extensible Python framework for building LLM-powered applications. It allows the setup of agents with optional components, assigning them tasks for collaborative problem-solving. It supports multi-agent collaboration, retrieval augmented question-answering, and function-calling. Langroid is modular, supports caching of LLM responses, and has a focus on grounding and source-citation. It supports a wide range of LLMs, including local/open or remote/commercial models.

While there are many frameworks available for building multi-agent systems, my selection process focused on those that can use custom system/user prompts or different models for each agent. An essential criterion was the ability to leverage local LLMs and run on a local machine, in line with the privacy and security advantages discussed in my previous Local LLM post. After some initial experiments, I found CrewAI to be particularly well suited to this project. It is lightweight and integrates easily with local LLMs, which makes it a good choice when access to content behind a firewall is crucial.

Building with CrewAI

- I used Ollama to run local LLMs.

- To build with CrewAI, I defined the agents one by one, giving each a role, a goal and a backstory, along with the necessary tools.

- In my experimentation I found that local models needed tweaks as defined here. I used `Observation` as my stop word.

- Among the different models, `mistral-instruct-7bv2` worked the best for this use case.

- I wanted to use a different model for each agent but ended up using Mistral for all agents, as it worked the best.

- I also used the Jira toolkit from LangChain, as it works really well with CrewAI and eliminates the need to write custom tools for the Jira agent.

- The code for this example is available on GitHub along with the instructions to run it.
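The two ideas in the list above, describing an agent by role, goal and backstory and using `Observation` as a stop word, can be illustrated with a small framework-free sketch. This is not CrewAI's API and not the code from the repository: `AgentSpec`, `system_prompt`, `truncate_at_stop`, the tool name `jira_search` and the sample completion are all hypothetical. The stop word explanation is also an assumption about why the tweak helps: in a Thought/Action/Observation style loop, a local model may keep writing and invent the tool's result itself, so generation is cut before the word `Observation` and the real tool output is supplied instead.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AgentSpec:
    """Hypothetical container mirroring the role/goal/backstory fields."""
    role: str
    goal: str
    backstory: str
    tools: List[str] = field(default_factory=list)
    stop: List[str] = field(default_factory=lambda: ["Observation"])

    def system_prompt(self) -> str:
        """Render the definition as one prompt string (format is illustrative)."""
        tool_list = ", ".join(self.tools) if self.tools else "none"
        return (
            f"You are the {self.role}.\n"
            f"Goal: {self.goal}\n"
            f"Backstory: {self.backstory}\n"
            f"Tools: {tool_list}"
        )


def truncate_at_stop(text: str, stop_words: List[str]) -> str:
    """Cut text just before the earliest occurrence of any stop word.

    An LLM backend normally applies stop words during generation; this
    function only emulates the effect on an already generated string.
    """
    cut = len(text)
    for word in stop_words:
        idx = text.find(word)
        if idx != -1:
            cut = min(cut, idx)
    return text[:cut]


# Invented example values, for illustration only.
jira_agent = AgentSpec(
    role="Jira agent",
    goal="List the issues a person worked on in a given time period",
    backstory="You know the team's Jira project well.",
    tools=["jira_search"],  # hypothetical tool name
)

# A made-up completion in which the model invents the tool result itself.
raw_completion = (
    "Thought: I need the issues for this user.\n"
    "Action: jira_search\n"
    "Action Input: assignee = currentUser()\n"
    "Observation: PROJ-1 Fix login bug\n"
    "Thought: I now know the final answer.\n"
)

print(jira_agent.system_prompt())
print("---")
print(truncate_at_stop(raw_completion, jira_agent.stop))
```

With the stop word applied, the text ends after the `Action Input` line, which leaves room for the actual tool output to be inserted as the observation.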

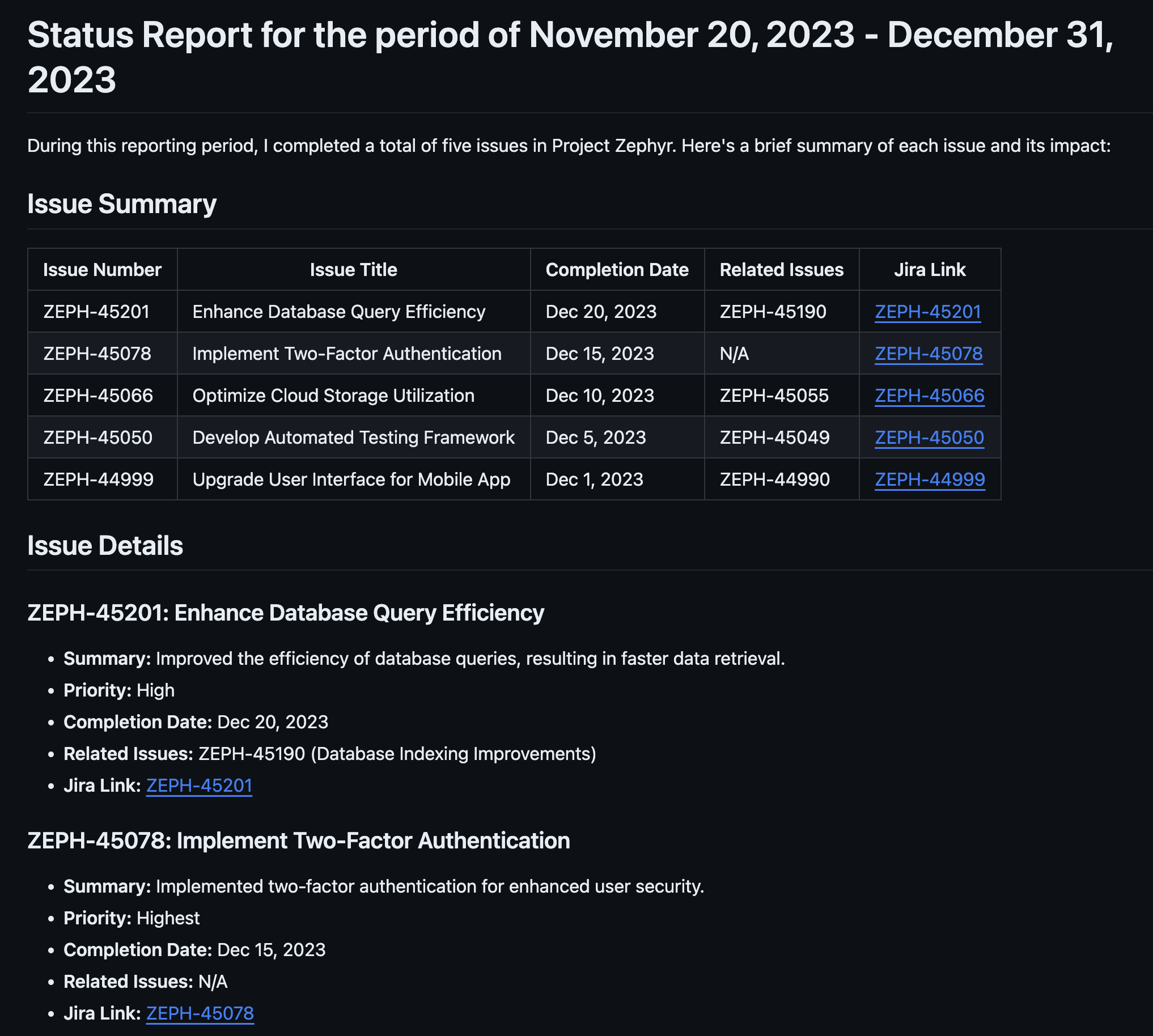

Here is a sample report

As you can see, without a lot of instruction or upfront coding, the agents were able to figure out the tool usage, build the Jira API query and produce Markdown. This is a very straightforward example, but it shows the power of multi-agent systems and how they can be used to solve complex problems, all with the security and privacy of local LLMs.

I plan to experiment more with CrewAI and other frameworks to build more complex agents and agent swarms, some fully autonomous and some with a human in the loop. I am also thinking about the possibilities that such systems can enable in the future.

Stay tuned for more on this topic.

In the meantime, check out the following examples: